OpenFL-XAI has been designed and implemented by the Artificial Intelligence R&D Group (UPI AI Group) at the Department of Information Engineering, University of Pisa. The work is part of the technical activities on “Connecting intelligence for 6G networks”, carried out jointly by the University of Pisa, TIM and Intel Corporation within the framework of the EU 6G Flagship project Hexa-X.

OpenFL-XAI extends the open-source framework OpenFL to provide user-friendly support for Federated Learning (FL) of explainable-by-design models, such as rule-based systems. OpenFL-XAI is now publicly available at https://github.com/Unipisa/OpenFL-XAI.

The UPI AI Group explains: “OpenFL-XAI enables several data owners, possibly located at different sites, to collaboratively learn an eXplainable Artificial Intelligence (XAI) model from their data while preserving their privacy. Thus, OpenFL-XAI addresses two key requirements towards trustworthiness of AI systems, namely privacy preservation and transparency.”

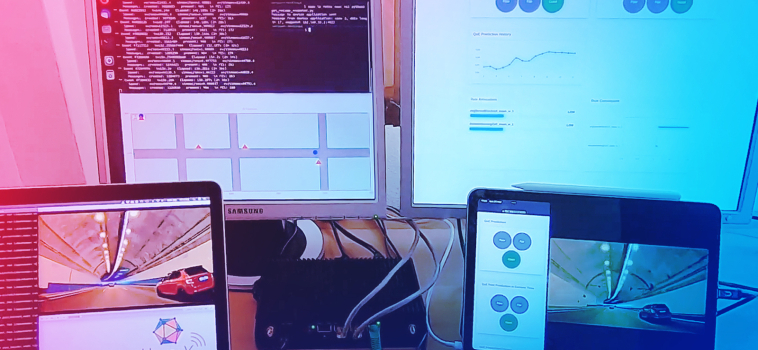

OpenFL-XAI has been used, together with the Simu5G simulator, as the core technology for building the FED-XAI (Federated Learning of eXplainable Artificial Intelligence models) demo, which was showcased at the Hexa-X booth at EuCNC & 6G Summit 2023. The FED-XAI demo showed the benefits of building XAI models in a federated manner, with a specific focus on an automotive use case: Tele-operated Driving (ToD), one of the innovative services envisioned for 6G. Specifically, FED-XAI forecasts the smoothness and quality of the video stream, both critical for safer ToD, by collaboratively training XAI models on data from multiple cars while providing meaningful explanations for its predictions.

“OpenFL has proved to be highly extensible for a variety of AI use cases that are enabled by preserving the privacy of the data used to train the models”, adds Prashant Shah, Head of OpenFL Product at Intel Corporation. “The addition of explainable model development support has enabled OpenFL to meet some of the fundamental requirements for trustworthy AI”. The UPI AI Group concludes: “OpenFL-XAI may serve as a significant facilitator in numerous safety-critical domains relying on AI applications, in which preservation of privacy and the transparency of the models are considered as imperative requisites for establishing trust in AI systems”.

Project Lead (Coordinator)